This is a summary of our own article, which won an editor's award.

Nvidia (NVDA) spotted the opportunity for parallel processing in training LLMs (Large Language Models) early on and has been diligently working to establish a compelling competitive advantage.

This competitive advantage doesn't rest only on the superiority of its chips. It also relies on the networking technology that links GPUs and memory chips, and on its proprietary software development platform CUDA, which enables accelerated parallel computing.

Unseating Nvidia would require competitors to gain an advantage on all three dimensions simultaneously, which is very difficult because Nvidia benefits from a first-mover advantage and some network effects.

The huge processing and memory needs of LLMs

Training an LLM like GPT-4 requires huge resources. It can't be done on a single chip, no matter how powerful, or even on a single server, as it involves shifting massive amounts of data across 10K-28K Nvidia GPUs and their servers, memory, etc. Hence the exploding demand for Nvidia's chips.
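To make the scale concrete, here is a back-of-envelope sizing sketch in Python. The ~16 bytes per parameter for mixed-precision training with the Adam optimizer and the ~6 × parameters × tokens FLOP estimate are common rules of thumb. The model size, token count, per-GPU throughput and utilization are hypothetical assumptions, not GPT-4's undisclosed figures.

```python
# Back-of-envelope sizing of an LLM training run.
# Every input below is a hypothetical assumption for illustration; GPT-4's
# actual parameter count and token count have not been disclosed.

SECONDS_PER_DAY = 86_400

# Mixed-precision training with Adam is commonly estimated at ~16 bytes per
# parameter: fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
# + two fp32 Adam moments (4 + 4).
BYTES_PER_PARAM = 16


def min_gpus_for_state(num_params: int, gpu_memory_bytes: int) -> int:
    """Lower bound on GPUs needed just to hold weights, gradients and optimizer
    state (activations, buffers and fragmentation are ignored)."""
    state_bytes = num_params * BYTES_PER_PARAM
    return -(-state_bytes // gpu_memory_bytes)  # ceiling division


def training_days(num_params: float, num_tokens: float, num_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Wall-clock days using the common ~6 * params * tokens FLOP estimate
    for training a dense transformer."""
    total_flops = 6 * num_params * num_tokens
    sustained_flops = num_gpus * peak_flops_per_gpu * utilization
    return total_flops / sustained_flops / SECONDS_PER_DAY


if __name__ == "__main__":
    params = 10**12        # hypothetical 1-trillion-parameter model
    tokens = 10**13        # hypothetical 10 trillion training tokens
    gpu_mem = 80 * 10**9   # 80 GB of HBM, as on an 80 GB H100
    gpu_flops = 1e15       # assumed ~1 PFLOP/s dense 16-bit peak per GPU
    util = 0.4             # assumed 40% sustained utilization

    print("GPUs just to hold training state:", min_gpus_for_state(params, gpu_mem))
    print(f"Days on 1 GPU:       {training_days(params, tokens, 1, gpu_flops, util):,.0f}")
    print(f"Days on 25,000 GPUs: {training_days(params, tokens, 25_000, gpu_flops, util):.1f}")
```

Under these assumptions, about 200 GPUs with 80 GB each would be needed just to hold the training state, before counting activations, and a 25,000-GPU cluster (inside the 10K-28K range above) would finish in roughly 70 days rather than the thousands of years a single GPU would take.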

GPU advantage

In a way, Nvidia was a little lucky to have the best GPU around 2017, when Google introduced the transformer, the architecture that underpins today's generative AI models, but it has exploited that head start to the full.

Hyperscalers are developing their own chips, such as Google's TPUs (Tensor Processing Units), Microsoft's Maia and Amazon's Trainium, driven by the cost and scarcity of Nvidia's chips. But these are for internal use in their own data centers: they don't sell them to third parties, and they still use huge numbers of Nvidia chips.

Intel with Gaudi 2 and AMD with the MI300X offer some competition. AMD's chip in particular can hold its own against Nvidia's, but AMD lags too far behind in the two other domains (networking and software) to pose a real threat to Nvidia's dominance.

AMD will likely gain some market share due to the scarcity of Nvidia chips.

Nvidia is upping the ante with the arrival of Blackwell, keeping one step ahead of the competition.

Upstarts

GPUs weren't designed for training or running LLMs, so a chip designed from scratch for the task could pay off big time.

One particular limitation is the memory wall: AI chips spend much, if not most, of their time shuffling data in and out of memory, given the huge and growing size of the data involved in training and running large AI models.
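A common way to reason about the memory wall is the roofline model: a chip's attainable throughput is the lesser of its peak compute and its memory bandwidth multiplied by the arithmetic intensity (FLOPs performed per byte moved). The Python sketch below assumes roughly H100-class figures (about 1 PFLOP/s of 16-bit compute and 3.35 TB/s of HBM bandwidth); treat these as approximate assumptions, not vendor-verified specifications.

```python
# Roofline-style estimate of the memory wall.
# Hardware figures are approximate assumptions (roughly H100-class).

PEAK_FLOPS = 1.0e15   # assumed peak 16-bit compute, FLOP/s
MEM_BW = 3.35e12      # assumed HBM bandwidth, bytes/s
BYTES_PER_ELEM = 2    # fp16 / bf16


def attainable_flops(intensity: float) -> float:
    """Throughput is capped either by compute or by how fast memory can feed
    the chip (bandwidth * FLOPs performed per byte moved)."""
    return min(PEAK_FLOPS, MEM_BW * intensity)


def matvec_intensity(n: int) -> float:
    """n x n matrix times a vector (like generating one token at a time):
    every weight is loaded once and used for a single multiply-add."""
    flops = 2 * n * n
    bytes_moved = BYTES_PER_ELEM * (n * n + 2 * n)  # matrix + input and output vectors
    return flops / bytes_moved


def matmul_intensity(n: int) -> float:
    """n x n times n x n matrix product (like training on large batches):
    each loaded element is reused n times."""
    flops = 2 * n ** 3
    bytes_moved = BYTES_PER_ELEM * 3 * n * n  # two inputs + one output
    return flops / bytes_moved


if __name__ == "__main__":
    n = 8192
    for name, intensity in [("matrix-vector", matvec_intensity(n)),
                            ("matrix-matrix", matmul_intensity(n))]:
        share = attainable_flops(intensity) / PEAK_FLOPS
        print(f"{name}: {intensity:,.1f} FLOP/byte -> {share:.1%} of peak")
```

In this model the matrix-vector case reaches well under 1% of peak because the chip is waiting on memory, while the large matrix product is compute-bound. The larger the matrices, the more work is done per byte moved.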

Memory has advanced much more slowly than GPU compute, so the memory wall has grown higher. This is somewhat mitigated by the fact that as large AI models get bigger, their demands on compute power grow faster than their memory demands. Several new companies offer new ways to deal with the memory wall; Cerebras and SambaNova are the most promising ones in our view.

Cerebras has produced a wafer-sized chip that, according to the company, can train LLMs up to 10x the size of GPT-4 and Gemini and reduce training time from weeks to hours.

SambaNova's AI chip, the SN40L, is embedded in a whole new architecture called RDA (Reconfigurable Dataflow Architecture). According to the company, this frees AI from the constraints of traditional software and hardware and overcomes the huge demands on memory, and management believes it will usher in a new era of computing.
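One idea behind dataflow designs is to keep intermediate results on chip instead of writing each one back to off-chip memory between operations. The toy Python model below counts off-chip reads and writes for a chain of simple operations executed kernel by kernel versus as one fused pipeline. It is a simplified illustration of operator fusion under our own assumptions (the class and function names are hypothetical), not a description of how SambaNova's hardware works.

```python
# Toy comparison of off-chip memory traffic: executing a chain of operations
# kernel by kernel versus streaming it as one fused dataflow pipeline.
# Simplified illustration of operator fusion, not SambaNova's design.

from typing import Callable, List, Sequence

Op = Callable[[float], float]


class OffChipMemory:
    """Counts element reads and writes to a stand-in for off-chip DRAM/HBM."""

    def __init__(self) -> None:
        self.reads = 0
        self.writes = 0

    def load(self, buf: Sequence[float]) -> List[float]:
        self.reads += len(buf)
        return list(buf)

    def store(self, buf: Sequence[float]) -> List[float]:
        self.writes += len(buf)
        return list(buf)


def run_kernel_by_kernel(x: Sequence[float], ops: Sequence[Op], mem: OffChipMemory) -> List[float]:
    """Each op reads its input from memory and writes its full output back."""
    buf = list(x)
    for op in ops:
        data = mem.load(buf)
        buf = mem.store([op(v) for v in data])
    return buf


def run_dataflow(x: Sequence[float], ops: Sequence[Op], mem: OffChipMemory) -> List[float]:
    """Input is read once, intermediates stay 'on chip', output is written once."""
    data = mem.load(x)
    for op in ops:
        data = [op(v) for v in data]
    return mem.store(data)


if __name__ == "__main__":
    ops: List[Op] = [lambda v: 2 * v, lambda v: v + 1, lambda v: max(v, 0.0), lambda v: 0.5 * v]
    x = [float(i) for i in range(-500, 500)]

    kbk_mem, flow_mem = OffChipMemory(), OffChipMemory()
    assert run_kernel_by_kernel(x, ops, kbk_mem) == run_dataflow(x, ops, flow_mem)
    print("kernel-by-kernel traffic:", kbk_mem.reads + kbk_mem.writes)
    print("dataflow traffic:        ", flow_mem.reads + flow_mem.writes)
```

Both paths produce identical results, but with four chained operations the kernel-by-kernel version moves four times as much data through off-chip memory.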

There are others like Groq, Graphcore, Esperanto, Syntiant and EnCharge AI, each with its own advantages and disadvantages.

Then there is the Complementary-Transformer AI chip from KAIST (Korea Advanced Institute of Science and Technology), whose developers claim to have cracked neuromorphic computing.

Networking

As LLMs run on hundreds or thousands of GPUs and shuffle huge amounts of data in and out of memory, the speed of the links between all these chips matters a great deal, as slow links add to the memory wall.

A cluster of just 16 GPUs spends 60 percent of its time communicating between the chips and only 40 percent actually computing.
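How communication can eat into a training step can be sketched with the standard bandwidth-only cost model of a ring all-reduce, the collective commonly used to average gradients across GPUs in data-parallel training. In that model each GPU sends and receives 2(p-1)/p of the gradient payload. The model size, link bandwidth and compute time below are hypothetical inputs, and the sketch assumes communication does not overlap with compute.

```python
# Share of a data-parallel training step spent on communication, using the
# bandwidth-only cost model of a ring all-reduce.
# Model size, link bandwidth and compute time are hypothetical inputs.


def ring_allreduce_seconds(num_gpus: int, payload_bytes: float, link_bytes_per_s: float) -> float:
    """Each GPU sends (and receives) 2*(p-1)/p of the payload; latency and
    overlap with compute are ignored."""
    p = num_gpus
    return 2 * (p - 1) / p * payload_bytes / link_bytes_per_s


def comm_fraction(num_gpus: int, payload_bytes: float,
                  link_bytes_per_s: float, compute_seconds: float) -> float:
    """Fraction of a step spent communicating, assuming no compute/comm overlap."""
    comm = ring_allreduce_seconds(num_gpus, payload_bytes, link_bytes_per_s)
    return comm / (comm + compute_seconds)


if __name__ == "__main__":
    grads = 7e9 * 2        # hypothetical 7B-parameter model, 2-byte gradients
    compute = 0.5          # hypothetical compute time per step, seconds
    for bw in (50e9, 250e9):  # assumed per-GPU link bandwidth, bytes/s (5x apart)
        f = comm_fraction(16, grads, bw, compute)
        print(f"{bw / 1e9:.0f} GB/s: {f:.0%} communicating, {1 - f:.0%} computing")
```

With these made-up inputs, roughly half of each step goes to communication at the lower bandwidth, and a 5x faster link cuts that share sharply. This is why interconnect speed is as much a competitive lever as the chips themselves.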

Nvidia has a competitive advantage here as well, and it is speeding things up significantly with the new Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms launched alongside Blackwell, which deliver end-to-end 800Gb/s throughput and, according to Nvidia, a 5x increase in bandwidth capacity.

There is also a new NVLink switch that lets 576 GPUs talk to each other, with 1.8 terabytes per second of bidirectional bandwidth. That required Nvidia to build an entirely new network switch chip, one with 50 billion transistors and some onboard compute of its own: 3.6 teraflops of FP8.
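The networking figures above mix units: network line rates are quoted in gigabits per second, while the NVLink figure is in terabytes per second and counts both directions. The small Python helper below puts them on a common footing. The payload size is a hypothetical input, and the comparison is only a unit conversion, not a benchmark.

```python
# Put the two interconnect figures on a common footing.
# Network links are quoted in Gb/s (bits); the NVLink figure is in TB/s
# (bytes) and counts both directions. Payload size is hypothetical.

BITS_PER_BYTE = 8


def network_gbytes_per_s(gbits_per_s: float) -> float:
    """Convert a line rate in Gb/s to GB/s."""
    return gbits_per_s / BITS_PER_BYTE


def per_direction_gbytes_per_s(bidirectional_tbytes_per_s: float) -> float:
    """Split a bidirectional TB/s figure into GB/s in each direction."""
    return bidirectional_tbytes_per_s * 1000 / 2


def transfer_seconds(payload_gbytes: float, gbytes_per_s: float) -> float:
    """Idealized time to move a payload at a given rate (no protocol overhead)."""
    return payload_gbytes / gbytes_per_s


if __name__ == "__main__":
    network = network_gbytes_per_s(800)        # 800 Gb/s end to end
    nvlink = per_direction_gbytes_per_s(1.8)   # 1.8 TB/s bidirectional
    payload = 14.0                             # hypothetical 14 GB of gradients
    print(f"800 Gb/s link:         {network:.0f} GB/s, {transfer_seconds(payload, network):.3f} s")
    print(f"NVLink, per direction: {nvlink:.0f} GB/s, {transfer_seconds(payload, nvlink):.3f} s")
```

An 800Gb/s link moves 100 GB/s, while 1.8 TB/s of bidirectional NVLink bandwidth is 900 GB/s in each direction.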

CUDA

CUDA is a proprietary parallel computing platform and programming model created by Nvidia for developing software that runs on parallel processors, namely Nvidia's GPUs.
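The core of CUDA's programming model is that a developer writes a kernel from the point of view of a single thread, and a launch configuration decides how many blocks of threads run it in parallel. Each thread finds its slice of the data from the built-in `blockIdx.x`, `blockDim.x` and `threadIdx.x` values. To keep all examples in one language, the Python sketch below simulates that model on the CPU; the function names are hypothetical, and the threads run sequentially here rather than in parallel as on a real GPU.

```python
# Pure-Python simulation of the CUDA execution model (no GPU needed).
# A kernel is written for ONE thread; the launch configuration
# <<<blocks, threads_per_block>>> decides how many threads run it.
# Here the threads run one after another; on a GPU they run in parallel.

from typing import Callable, List


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: List[float], b: List[float], out: List[float], n: int) -> None:
    """Mirrors the classic CUDA C kernel:
        int i = blockIdx.x * blockDim.x + threadIdx.x;
        if (i < n) out[i] = a[i] + b[i];
    """
    i = block_idx * block_dim + thread_idx  # this thread's global index
    if i < n:                               # guard: the grid may overshoot n
        out[i] = a[i] + b[i]


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Stand-in for kernel<<<grid_dim, block_dim>>>(args...)."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


if __name__ == "__main__":
    n = 1000
    a = [float(i) for i in range(n)]
    b = [2.0 * i for i in range(n)]
    out = [0.0] * n
    threads_per_block = 256
    blocks = (n + threads_per_block - 1) // threads_per_block  # round up: 4 blocks
    launch(vector_add_kernel, blocks, threads_per_block, a, b, out, n)
    print(out[:3], out[-1])
```

Writing code this way is what lets the same kernel scale from a few thousand to millions of threads. It is also why CUDA code and libraries tuned for Nvidia's hardware are hard to move elsewhere.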

CUDA predates generative AI. It was designed from the ground up to be accessible to a broad audience of developers and has been optimized continuously without sharing insights with competitors. As a result, CUDA applications achieve much better performance and efficiency on Nvidia hardware than vendor-neutral solutions like OpenCL.

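So Nvidia had a double first-mover advantage with its GPUs and CUDA, creating a virtuous cycle: a large CUDA developer base makes Nvidia hardware more attractive, and a large installed base of Nvidia hardware draws more developers to CUDA.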

Alternative platforms (AMD's MIOpen and Intel's oneAPI) have come to little because they suffer from a chicken-and-egg problem: a limited user base creates insufficient incentives to close the gaps with CUDA in tools and libraries, which keeps the user base limited.

There are many other initiatives here, each addressing parts of the problem and offering alternatives, including PyTorch, ROCm, Triton, oneAPI, Lambda Labs, JAX, Julia, and abstraction layers like Microsoft Cognitive Toolkit, ONNX Runtime and WinML.

While one-for-one CUDA replacements struggle to gain traction, CUDA's dominance faces two threats: interoperability and portability, which solutions like PyTorch 2.0 and OpenAI's Triton help achieve (see the original article for the rather technical details).
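The portability idea can be illustrated with a toy dispatch layer. Model code calls a hardware-neutral operation, and a registry routes it to whichever vendor backend is available, so switching hardware does not require rewriting the model. The backend names and implementations below are hypothetical stand-ins; this is not the PyTorch or Triton API, only a sketch of the general pattern such tools rely on.

```python
# Toy illustration of software portability: model code targets a
# hardware-neutral operation and a registry picks a vendor-specific backend.
# Backend names and kernels are hypothetical; this is not PyTorch or Triton.

from typing import Callable, Dict, List

Matrix = List[List[float]]
_BACKENDS: Dict[str, Callable[[Matrix, Matrix], Matrix]] = {}


def register_backend(name: str):
    """Decorator that registers a matmul implementation under a backend name."""
    def decorator(fn: Callable[[Matrix, Matrix], Matrix]):
        _BACKENDS[name] = fn
        return fn
    return decorator


@register_backend("vendor_a")
def _matmul_vendor_a(x: Matrix, y: Matrix) -> Matrix:
    # stand-in for a kernel tuned for one vendor's hardware
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


@register_backend("vendor_b")
def _matmul_vendor_b(x: Matrix, y: Matrix) -> Matrix:
    # stand-in for another vendor's kernel: same math, different loop order
    n, m, p = len(x), len(y), len(y[0])
    out = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for k in range(m):
            for j in range(p):
                out[i][j] += x[i][k] * y[k][j]
    return out


def matmul(x: Matrix, y: Matrix, backend: str) -> Matrix:
    """Hardware-neutral entry point: model code never names vendor kernels."""
    return _BACKENDS[backend](x, y)


def model_forward(x: Matrix, w: Matrix, backend: str) -> Matrix:
    # the "model" is written once and runs unchanged on any registered backend
    return matmul(x, w, backend)


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]
    w = [[5.0, 6.0], [7.0, 8.0]]
    for name in _BACKENDS:
        print(name, model_forward(x, w, name))
```

The more of the ecosystem that targets such neutral layers instead of CUDA directly, the lower the cost of switching hardware, which is the gradual threat described here.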

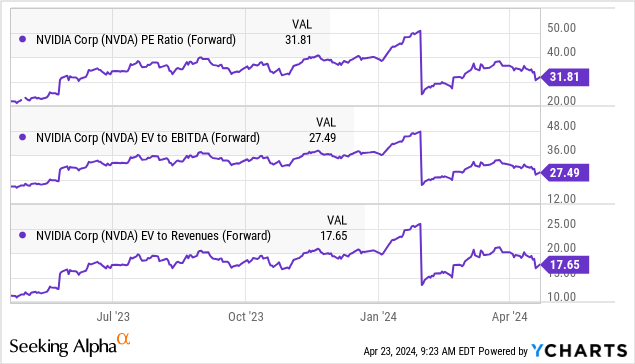

Valuation

At $880 (at the time of writing), NVDA shares can only be described as richly valued (though forward multiples are more palatable), but we are likely still in the early innings of the generative AI buildout.

Valuation isn't built on thin air either.

Conclusion

Nvidia's dominant position is likely to endure because it is multifaceted: a challenger would have to overcome the quality of its chips, its networking and the large user base of its software, all at the same time.

There are nevertheless two threats that could slowly erode some of its dominance, as the scarcity and cost of Nvidia chips provide ample incentives to look for alternatives.

The first is new chip designs purpose-built for AI that offer a quantum leap in performance; we find the solutions of Cerebras and SambaNova particularly interesting here.

The second threat comes from a gradual move towards interoperability and portability of software, enabled by emerging solutions like PyTorch and OpenAI's Triton.

Still, so far Nvidia has managed to stay one step ahead of the competition, and there are signs it is still doing so with the new Blackwell chips.

While certainly not cheap, we still see Nvidia shares higher in 12-18 months, given the tremendous demand (far outstripping supply), barring some major market mayhem.