Embedded AI Is The Next Big Thing

Combine two big trends, AI and robotics, and you get humanoid robots.

Even in very volatile or bear markets, there are always opportunities, especially those carried by super-strong tailwinds like AI. And what about two super-strong tailwinds combined?

While the AI revolution is getting all the limelight, the robotics revolution has been quietly moving forward. This is about to change as the two technologies merge, producing humanoid robots that people can interact with and that can assess and recognize their environment, respond to voice commands, and learn in simulated or real environments, getting better rapidly all the time.

There are still some limits, but things are moving fast, and the implications for society are absolutely huge; there will be a lot of upheaval. But fortunes will be made as well, so we’re going to survey the investment landscape of this convergence of robots and AI. First, a history lesson.

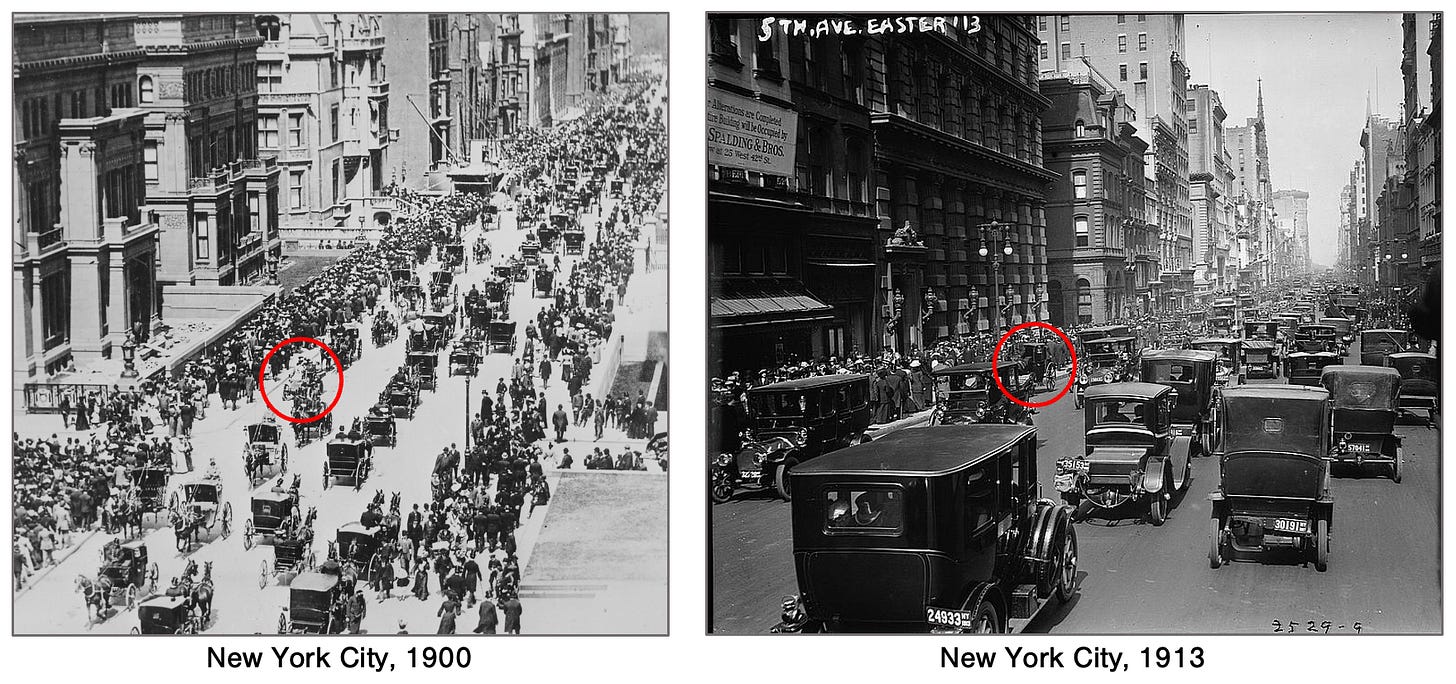

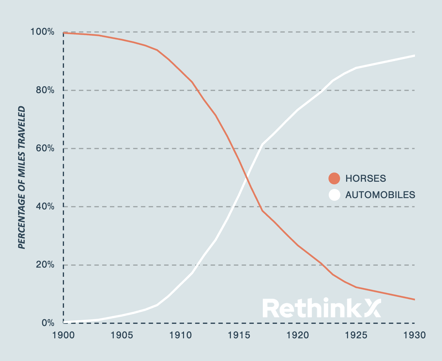

History is full of disruptions like this. Consider the transition from horses to cars: one car in 1900, one horse in 1913.

These developments can move at lightning speed, and we think AI-powered humanoid robots will rapidly become much more capable and cheaper at the same time, making them an increasingly formidable competitor for an ever wider circle of tasks and even jobs.

Humanoid robotics

We’re now on the verge of a new displacement: humans by humanoid robots. It is driven by a relentless decline in the price, and a relentless increase in the capability, of humanoid robots. On the price side, consider what a humanoid robot is built from:

Sensors (cameras, tilt sensors, pressure sensors, microphones, accelerometers, etc.) to take in sensory data

Computer hardware and software to process sensory data with powerful AI

Actuators to move and interact with objects in the environment

Batteries and power electronics to provide energy for hours of sensing, computing, and moving

Each of these technologies has gotten dramatically cheaper and more powerful in recent years, setting the stage for the disruption of labor… Throughout history, every time technology has enabled a 10x or greater cost reduction relative to the incumbent system, a disruption has always followed. Each dollar spent on an automobile or an LED light bulb or a digital camera delivers more than 10 times the utility of each dollar spent on a horse, incandescent bulb, or film camera respectively.
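To make the 10x rule concrete, here is a minimal Python sketch that compares an incumbent and a challenger on cost per unit of delivered utility and checks whether the challenger clears the 10x threshold quoted above. The function names and all the figures in the example are hypothetical, chosen only for illustration; they are not RethinkX data.

```python
def cost_per_unit(total_cost_usd: float, units_of_utility: float) -> float:
    """Dollars spent per unit of delivered utility (a task, a lumen-hour, a photo...)."""
    if units_of_utility <= 0:
        raise ValueError("units_of_utility must be positive")
    return total_cost_usd / units_of_utility


def disruption_multiple(incumbent_cpu: float, challenger_cpu: float) -> float:
    """How many times more utility per dollar the challenger delivers."""
    return incumbent_cpu / challenger_cpu


def crosses_10x(incumbent_cpu: float, challenger_cpu: float, threshold: float = 10.0) -> bool:
    """True if the challenger clears the 10x cost-reduction threshold."""
    return disruption_multiple(incumbent_cpu, challenger_cpu) >= threshold


# Hypothetical figures, for illustration only.
incumbent = cost_per_unit(1_000, 100)        # $10.00 per unit
strong_challenger = cost_per_unit(250, 400)  # $0.625 per unit
weak_challenger = cost_per_unit(400, 100)    # $4.00 per unit

print(disruption_multiple(incumbent, strong_challenger), crosses_10x(incumbent, strong_challenger))  # 16.0 True
print(disruption_multiple(incumbent, weak_challenger), crosses_10x(incumbent, weak_challenger))      # 2.5 False
```

Framing the comparison as cost per unit of utility, rather than sticker price, is what lets very different technologies (a horse and a car, a bulb and an LED) be compared on one axis.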

MIT Technology Review named fast-learning robots one of its 10 Breakthrough Technologies of 2025. Roboticists marvelled at the way LLMs were fed massive amounts of data, turning it into useful insights, and started working on doing the same for robotics:

One was figuring out how to combine different sorts of data and then make it all useful and legible to a robot. Take washing dishes as an example. You can collect data from someone washing dishes while wearing sensors. Then you can combine that with teleoperation data from a human doing the same task with robotic arms. On top of all that, you can also scrape the internet for images and videos of people doing dishes. By merging these data sources properly into a new AI model, it’s possible to train a robot that, though not perfect, has a massive head start over those trained with more manual methods. Seeing so many ways that a single task can be done makes it easier for AI models to improvise, and to surmise what a robot’s next move should be in the real world. It’s a breakthrough that’s set to redefine how robots learn.

The truth is simple and inexorable: while humanoid robots are getting better and cheaper, workers are getting more expensive.

In California, for example, fast-food workers have been entitled to a $20/hour minimum wage since 2024. Meanwhile, McDonald’s opened a highly automated test restaurant in Fort Worth, Texas, back in late 2022, which is a long time ago given how fast technology is progressing. And McDonald’s is hardly alone; multiple fast-food chains are experimenting.

Then there is Café X, a robot-operated coffee bar at Terminal 1 of San Francisco’s airport (one of three locations), which can handle 6 orders at a time and prepare over 1,000 different drinks. It’s open 24/7, another advantage of robotics.

As far as we can make out, these are not robots driven by LLMs; they don’t learn by themselves or get better. They are pre-programmed to execute relatively simple tasks.

Robots are already widespread in warehouses and in car manufacturing. Amazon alone has deployed over 750K robots, about one for every two employees, and has been testing Agility Robotics’ humanoid robot Digit.

When robots can make the coffee, assemble the cars, and prepare and deliver the packages, suddenly we're talking about tens of millions of people whose jobs are in immediate danger.

Adding AI to the equation

Robots take a great leap forward when combined with LLMs. AI is already making inroads into businesses everywhere, with perhaps the best-known examples being self-driving cars and customer-service chatbots, and LLMs will dramatically speed up the progress of humanoid robots in multiple ways:

Natural Language Understanding and Task Planning

LLMs enable robots to interpret complex verbal commands and generate step-by-step plans for long-horizon tasks. For example, robots can decompose instructions like "make a hot beverage" into subtasks (e.g., locating a kettle, pouring water) using code generation and environmental feedback.
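The decompose-execute-feedback loop described above can be sketched in a few lines of Python. This is a toy illustration under heavy assumptions: `fake_llm_plan` is a hard-coded stand-in for an actual LLM call, and `World`, `execute`, and the "open cupboard" recovery are hypothetical. It shows task decomposition plus a retry driven by environmental feedback, not code generation, and it is not the API of Project GR00T or any other real system.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


def fake_llm_plan(instruction: str) -> List[str]:
    """Hypothetical stand-in for an LLM planner: returns a hard-coded decomposition."""
    plans = {
        "make a hot beverage": [
            "locate kettle",
            "fill kettle with water",
            "boil water",
            "locate mug",
            "pour water into mug",
        ],
    }
    return plans.get(instruction, [])


@dataclass
class World:
    """Toy environment: the objects the robot can currently see."""
    visible: Set[str] = field(default_factory=lambda: {"kettle", "tap"})


def execute(step: str, world: World) -> Tuple[bool, str]:
    """Simulated execution: 'locate X' fails unless X is visible; other steps succeed."""
    if step.startswith("locate "):
        obj = step[len("locate "):]
        if obj not in world.visible:
            return False, f"{obj} not found"
    return True, "ok"


def run(instruction: str, world: World, max_retries: int = 1) -> List[str]:
    log = []
    for step in fake_llm_plan(instruction):
        ok, feedback = execute(step, world)
        retries = 0
        while not ok and retries < max_retries:
            # Environmental feedback: a real system would send `feedback` back to the
            # LLM for a recovery step. Here the recovery is hard-coded: opening a
            # cupboard makes the missing object visible.
            log.append(f"recover: open cupboard ({feedback})")
            world.visible.add(step[len("locate "):])
            ok, feedback = execute(step, world)
            retries += 1
        log.append(f"{step}: {'done' if ok else 'failed'}")
    return log


for line in run("make a hot beverage", World()):
    print(line)
```

Running it, the mug is initially not visible, so the "locate mug" step fails once, triggers the recovery, and then succeeds before the plan continues.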

NVIDIA’s Project GR00T leverages LLMs to translate high-level goals into actionable plans, allowing robots to reason about their environment and adapt instructions to dynamic scenarios.

Closed-Loop Interaction and Incremental Learning

LLMs enable real-time adaptation through feedback loops. Robots refine their actions based on execution results, sensor data, and human corrections. For instance, errors in object manipulation trigger code adjustments, improving future performance.

Incremental prompt learning allows robots to save improved interaction patterns in memory, enabling generalization to similar tasks without retraining. An example:

For instance, consider the interaction depicted in Figure 1. First, the user instructs the robot to help him clean the top of the fridge (1). The robot then executes several actions to hand over a sponge to the human (2). The user observes this insufficient result and gives instructions for improvement (“I also need a ladder”) (3), whereupon the robot performs corrective actions (4). If the desired goal is achieved, the user can reconfirm the correction (5), which leads to the robot updating its memory appropriately (6), thus incrementally learning new behavior based on language instructions.
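A minimal Python sketch of the sponge-and-ladder interaction follows, assuming a simple memory keyed on the exact instruction string. `InteractionMemory`, `first_attempt`, `corrective_action`, and `interact` are hypothetical names, and the LLM reasoning is replaced by hard-coded stand-ins; the system quoted above retrieves similar past interactions through the LLM prompt, which this sketch does not attempt.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class InteractionMemory:
    """Hypothetical store of user-confirmed instruction -> action sequences."""
    episodes: Dict[str, List[str]] = field(default_factory=dict)

    def recall(self, instruction: str) -> Optional[List[str]]:
        return self.episodes.get(instruction)

    def store(self, instruction: str, actions: List[str]) -> None:
        self.episodes[instruction] = list(actions)


def first_attempt(instruction: str) -> List[str]:
    """Stand-in for the LLM's initial plan (hard-coded for this example)."""
    return ["hand over sponge"]


def corrective_action(feedback: str) -> str:
    """Stand-in for LLM reasoning over a correction: naive last-word extraction."""
    item = feedback.rstrip(".!").rsplit(" ", 1)[-1]  # "I also need a ladder" -> "ladder"
    return f"hand over {item}"


def interact(memory: InteractionMemory, instruction: str,
             correction: Optional[str] = None, confirmed: bool = False) -> List[str]:
    # Steps (1)-(2): reuse a confirmed plan if one exists, otherwise make a first attempt.
    remembered = memory.recall(instruction)
    actions = remembered if remembered is not None else first_attempt(instruction)
    # Steps (3)-(4): the user's verbal correction adds a corrective action.
    if correction is not None:
        actions = actions + [corrective_action(correction)]
    # Steps (5)-(6): on confirmation, the improved behavior is written to memory.
    if confirmed:
        memory.store(instruction, actions)
    return actions


memory = InteractionMemory()
task = "help me clean the top of the fridge"
print(interact(memory, task, correction="I also need a ladder", confirmed=True))
print(interact(memory, task))  # recalled from memory, no correction needed
```

Both calls print `['hand over sponge', 'hand over ladder']`: the second time, the robot hands over the ladder without being told, because the corrected behavior was stored rather than retrained.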

Multimodal Perception and Coordination

Integrating LLMs with vision-language models (VLMs) enhances environmental understanding. Robots fuse visual, auditory, and force-sensor data to navigate unstructured spaces and manipulate objects precisely, even under occlusion.

Collaborative workflows between robots are streamlined via LLM-mediated communication, enabling tasks like object handoffs through shared situational awareness:

Unlike previous robot-control systems, Helix could perform long-horizon, collaborative and dexterous manipulation of objects “on the fly” without hours of training demonstrations or extensive manual programming, Figure said. The system has strong object generalization and can pick up unique household items not encountered in training by following natural-language prompts.

And from The Conversation:

A good example is an AI system built by Google called PaLM-E. Engineers behind the development of PaLM-E trained it to directly ingest raw streams of robot sensor data. The resulting AI system allowed the robot to learn very effectively… Advancements such as PaLM-E infuse humanoids with visual-spatial intelligence, allowing them to make sense of our world without requiring extensive programming.

Simulation-to-Reality Training

LLMs accelerate policy training in simulated environments using synthetic data. Robots pretrained in virtual settings transfer skills to the real world with minimal post-training, reducing development time.

GR00T-Mimic generates diverse motion trajectories from limited human demonstrations, enabling scalable skill acquisition.

Generalization and Foundation Models

Foundation models like GR00T N1 provide a base for generalist robot intelligence. These models support tasks ranging from grasping to multistep assembly, with post-training customization for specific applications.

LLMs enable cross-domain adaptability, allowing robots to apply learned skills in new contexts (e.g., domestic tidying to factory logistics).

Human-Robot Collaboration

LLMs facilitate natural dialog interfaces, letting non-experts train robots through verbal feedback. For example, correcting a misstep in a task updates the robot’s code for future executions.

Ethical and safety protocols can be embedded via LLM-generated constraints, helping keep robot behavior compliant in human-centric environments.

Examples

There are plenty of examples, such as:

1X’s NEO Gamma, which autonomously tidies rooms by combining vision, language, and motion planning.

ARMAR-6 uses LLMs for high-level orchestration, enabling incremental learning from natural interaction.

Boston Dynamics’ Atlas leverages LLM-enhanced task planning for complex manufacturing operations.

There are plenty more, and we’ll introduce many of them in this series. Since AI is advancing rapidly, so are AI-driven humanoid robots. From CIO:

“With Grok-3 and other generative AI models, these robots will improve in situational awareness and decision-making. Since these AI models are advancing rapidly, I would expect that this will translate into all robots advancing quicker than ever,” says Chris Nardecchia, CIO of Rockwell Automation.

According to Gartner, by 2027, 10% of all smart robots sold will be next-generation humanoid working robots.

This is not surprising: Goldman Sachs Research, for example, predicts that the humanoid robot market could reach $38 billion by 2035, a six-fold increase over its earlier estimate.

Consequences

What if you combine dramatically enhanced capabilities with dramatically falling costs? You get the potential for massive disruption. Fortunes will be made (and lost), and humans will have to cede more tasks, then entire jobs, and even large parts of segments to robots, simply because they can no longer compete.

We’re in the very early innings of this, but we see this happening in multiple places already:

Robots are increasingly taking over manufacturing tasks, especially in China.

Robots have invaded warehousing and logistics.

Self-driving cars.

Customer service.

This will spread rapidly to other segments (education, healthcare, construction, etc.). Here are some of the main consequences:

The correct unit of analysis for this disruption is tasks, not jobs. Robots will perform specific tasks, and the cost-capability metric should be tasks per hour per dollar. Most jobs involve multiple tasks requiring different skills, and robots will initially perform narrower sets of tasks, with their capabilities growing over time. RethinkX projects the cost-capability of humanoid robot labor to be under $10/hour in 2025 already, falling to under $1/hour before 2035 and under $0.10/hour before 2045. This cost reduction, coupled with the ability of robots to work more than three times as many hours per week without breaks, makes them increasingly competitive.
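The cost-capability comparison above can be worked through in a short Python sketch. The robot cost ceilings ($10, $1, and $0.10 per hour) are the RethinkX milestones quoted in the text, and $20/hour is the California fast-food minimum wage mentioned earlier. Everything else is an assumption for illustration: the `Labor` class is hypothetical, both workers are assumed to complete the same number of tasks per hour, and the robot works 120 hours a week versus 40 for the human (exactly three times, where the text says "more than three times").

```python
from dataclasses import dataclass


@dataclass
class Labor:
    name: str
    cost_per_hour: float   # USD per working hour
    tasks_per_hour: float  # throughput on one specific task (assumed)
    hours_per_week: float  # available working hours per week (assumed)

    def tasks_per_dollar(self) -> float:
        # Tasks per hour divided by dollars per hour reduces to tasks per dollar.
        return self.tasks_per_hour / self.cost_per_hour

    def weekly_tasks(self) -> float:
        return self.tasks_per_hour * self.hours_per_week


human = Labor("human", cost_per_hour=20.0, tasks_per_hour=10.0, hours_per_week=40.0)

# RethinkX cost ceilings from the text; tasks/hour parity and 120 h/week are assumptions.
for milestone, robot_cost in [("2025", 10.0), ("before 2035", 1.0), ("before 2045", 0.10)]:
    robot = Labor(f"robot ({milestone})", robot_cost, tasks_per_hour=10.0, hours_per_week=120.0)
    advantage = robot.tasks_per_dollar() / human.tasks_per_dollar()
    print(f"{robot.name}: {advantage:.0f}x tasks per dollar, "
          f"{robot.weekly_tasks():.0f} vs {human.weekly_tasks():.0f} tasks per week")
```

Because the sketch holds tasks per hour equal, the per-dollar advantage is simply the wage ratio (2x, 20x, 200x). Under these assumptions, the sub-$1/hour milestone already clears the 10x threshold on cost alone, before counting the extra hours a robot can work.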

The marginal cost of labor will rapidly approach zero, leading to new system properties and behaviors, similar to how the internet reduced the marginal cost of information.

As labor is an essential input, the falling cost of robot labor will lead to cheaper products and services across the entire global economy. Simultaneously, the quality of manufactured goods will tend to improve as robots can perform tasks with consistent precision and without the need for cost-cutting on quality. All this will lead to skyrocketing productivity.

Available labor can now grow as fast as robots can be built and deployed, enabling entirely new applications and industries. Combining the relaxation of labor supply constraints with skyrocketing productivity will end scarcity and enable nations to massively expand their workforce and grow their economies. Adding robots to the workforce is significantly cheaper and faster than raising and training human workers. Humanoid robotics has the potential to massively increase prosperity worldwide by expanding material abundance and making major social, economic, geopolitical, and environmental problems more solvable.

The disruption of labor by humanoid robots will accelerate the other foundational disruptions of energy, transportation, and food by making the manufacturing and deployment of these technologies cheaper and more efficient. Conversely, advancements in these other sectors will also aid in the development and adoption of humanoid robots.

Manufacturability will be critical for the widespread deployment of humanoid robots to minimize production costs and maximize volume. The humanoid form factor will dominate initial robotics applications as existing infrastructure and equipment are designed around it. It also facilitates data gathering for AI training and leverages the general-purpose capabilities of the human form.

Autocatalysis of humanoid robot production (using robots to manufacture more robots) will be key to accelerating their production and deployment.

While technological unemployment remains inevitable in the longer term, there will be an initial period where robots primarily meet latent demand for labor, creating a crucial planning window for a soft landing. Policymakers should use this time to prepare for the eventual widespread displacement of human labor rather than pretending it won't happen or trying to ban robots.

The demand for robots will be so great that many different firms will thrive simultaneously in the early years, targeting various market niches and using different business models.

It is crucial to protect people, not jobs, firms, or industries, during this disruption. Governments should avoid protecting incumbent interests at the expense of progress and the well-being of individuals affected by job displacement.

As a parting shot, here is Boston Dynamics’ new Atlas.
